Handling Context Length Exceeded Error in AI Assistant

App will soon be removed

The AI Assistant for Confluence will be discontinued and removed on 1 May 2026, after which it will no longer work.

When using the ChatGPT plugin for Confluence, you may run into the context length limit. This is the maximum number of tokens ChatGPT can process in a single request. Long articles can exceed it, and the AI then cannot respond to the full content.

To handle this, the plugin offers an option that limits the content submitted to ChatGPT to the beginning of the article, so that the request stays within the context limit.

Note that when this option is active, the full article is not sent to ChatGPT. Only the beginning of the article is analyzed, so your results may relate only to the first part of your content. In exchange, the request is guaranteed to stay within the context limit.
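To illustrate what "only the beginning" means, here is a minimal Python sketch. It is not the plugin's implementation: the function name `truncate_to_budget` and the `max_tokens` budget are hypothetical, and tokens are approximated by whitespace-separated words, whereas ChatGPT counts tokens with its own tokenizer, so real cut-off points will differ.

```python
def truncate_to_budget(text: str, max_tokens: int) -> str:
    """Keep only the leading part of `text` that fits in `max_tokens`.

    Assumption: one token per whitespace-separated word. A real model
    tokenizer usually produces more tokens than words.
    """
    if max_tokens <= 0:
        return ""
    words = text.split()
    if len(words) <= max_tokens:
        return text  # the article already fits and is sent unchanged
    # Everything after the budget is dropped; the end of the article is never seen.
    return " ".join(words[:max_tokens])


article = "Intro paragraph. Details follow here. Conclusion at the very end."
print(truncate_to_budget(article, 4))  # Intro paragraph. Details follow
```

The conclusion of the sample article is cut off, which is why responses may reflect only the start of a long page.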

Enable article token limitation

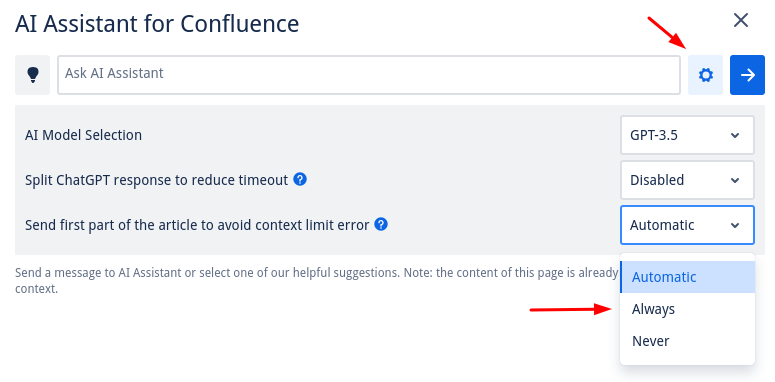

To enable the option, click the gear icon next to the 'Send' button. In the dropdown menu that appears, select 'Automatic' or 'Always'.

If 'Always' is selected, the plugin sends only the initial part of the article that fits within the context limit. Keep in mind that responses are then generated from the beginning of your content only.

If 'Automatic' is selected (the default), the limitation is applied only when the article is too long to fit within the context limit.
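The difference between the two settings can be expressed as a small decision function. This is a hedged Python sketch, not the plugin's code: `LimitMode`, `should_limit`, and the `CONTEXT_LIMIT` value are hypothetical, and only the two options described above are modelled.

```python
from enum import Enum


class LimitMode(Enum):
    AUTOMATIC = "Automatic"  # default setting
    ALWAYS = "Always"


def should_limit(mode: LimitMode, article_tokens: int, context_limit: int) -> bool:
    """Return True if only the beginning of the article should be sent."""
    if mode is LimitMode.ALWAYS:
        # Always truncate; for a short article the "fitting part" is the whole article.
        return True
    # AUTOMATIC: truncate only when the full article would not fit.
    return article_tokens > context_limit


CONTEXT_LIMIT = 4096  # hypothetical limit, for illustration only
print(should_limit(LimitMode.AUTOMATIC, 3000, CONTEXT_LIMIT))  # False
print(should_limit(LimitMode.AUTOMATIC, 5000, CONTEXT_LIMIT))  # True
print(should_limit(LimitMode.ALWAYS, 100, CONTEXT_LIMIT))      # True
```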

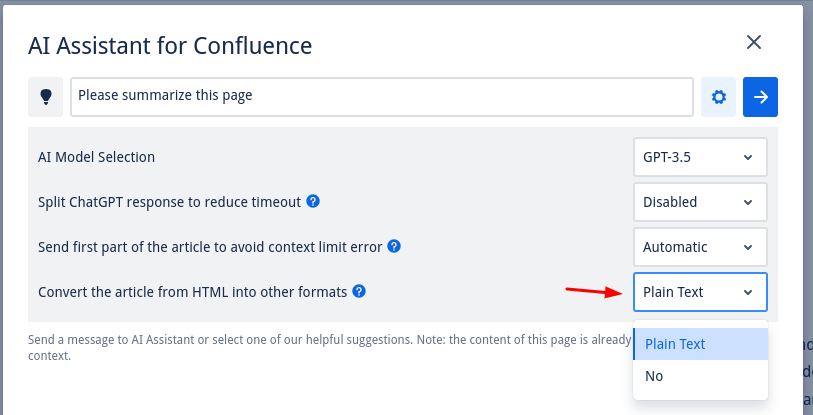

Convert the article from HTML into other formats

In some cases, converting your article into a format with less markup reduces the total token count and helps it stay within the context length limit. The ChatGPT plugin currently offers one such conversion: from HTML to plain text. Plain text removes elements that HTML carries, such as tags, styles, and other attributes, which can significantly reduce the number of tokens the article uses and leaves more of the context window for the article's actual content.
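The effect of dropping markup can be sketched with Python's standard `html.parser` module. This is an illustration under assumptions, not the plugin's converter: `TextExtractor`, `html_to_text`, and `rough_token_count` are hypothetical names, the set of block tags is a simplification, and the "about 4 characters per token" estimate is a rule of thumb rather than ChatGPT's actual tokenizer.

```python
from html.parser import HTMLParser

# Tags around which a space is inserted so words from separate blocks do not merge.
BLOCK_TAGS = {"p", "div", "br", "li", "tr", "td", "th", "h1", "h2", "h3", "h4", "h5", "h6"}


class TextExtractor(HTMLParser):
    """Keeps text content; drops tags, attributes, and <style>/<script> bodies."""

    def __init__(self) -> None:
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self.parts: list[str] = []
        self.skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("style", "script"):
            self.skip_depth += 1
        elif tag in BLOCK_TAGS:
            self.parts.append(" ")

    def handle_endtag(self, tag):
        if tag in ("style", "script"):
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag in BLOCK_TAGS:
            self.parts.append(" ")

    def handle_data(self, data):
        if self.skip_depth == 0:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse the whitespace left behind where markup was removed.
    return " ".join("".join(parser.parts).split())


def rough_token_count(text: str) -> int:
    # Assumption: roughly 4 characters per token (rule of thumb for English text).
    return (len(text) + 3) // 4


html = '<div class="panel" style="color:#333"><h1>Setup</h1><p>Click the <b>gear</b> icon.</p></div>'
text = html_to_text(html)
print(text)  # Setup Click the gear icon.
print(rough_token_count(html), "->", rough_token_count(text))
```

In this small sample the estimated token count drops by more than two thirds, because the class and style attributes and the tags carry no content the model needs.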